bit-tech: Nvidia has PhysX, and Intel bought Havok, so what is AMD now doing in terms of physics and other future technologies such as 3D?

RH: Well, there are two thrusts to this. The first thing is that we are very much the open standards company; we really go down this route all the time. So while we're [still] working with Havok, we're doing this through OpenCL, and we do it with other open things as well - DirectCompute is another reasonable way to go too. The point is that if we have this implementation with Havok, it will also work with anyone's OpenCL driver - that includes Intel and Nvidia - which is a better way to go for the consumer. On top of the Havok work, we are also working with Bullet Physics, which has a GPU-accelerated version underway, and I believe Nvidia are working with them too. That's primarily owned by Sony so it's used more on console games than PC games.

Finally there's a third company involved called Pixelux, who created some beautiful DMM - digital molecular matter - that was used in Star Wars: The Force Unleashed. It has beautiful physics simulation that uses a lot of CPU power, but it's also perfect for accelerating on the GPU. Once again we will try as hard as we can through open standards so OpenCL is the preferred route for that kind of stuff.

So when it comes to getting it on the GPU - we are very serious about it, and as long as Havok are allowed to work with us, we'll work with them. If for some reason they decide that Larrabee is going to be a success, don't can it, and say "we're going to shut you out", then there's clearly nothing we can do about that.

bit-tech: Well Larrabee's a part of an SDV product only at the moment-

RH: Larrabee's in flux shall we say. Let's be polite to Intel.

Intel recently announced it was shelving plans to make Larrabee hardware

bit-tech: Have you seen anyone that was previously committed to Intel come back to you recently?

RH: I don't think Intel has finished making up its mind about Larrabee yet, and I've got a couple of reasons for that: one is public and the other is a private conversation I cannot comment on. I think they will spend time considering how big an investment they've flushed away on Larrabee, and also that only two companies have a good history in making GPUs - even we struggle with it, it's not easy. Simply saying that we've got an architecture that we are going to bend into shape for this is not necessarily a brilliant plan. You can't simply take 20 engines from mini-Metros and shove them into a sports car to make something that's colossally fast; it's no way to go about it.

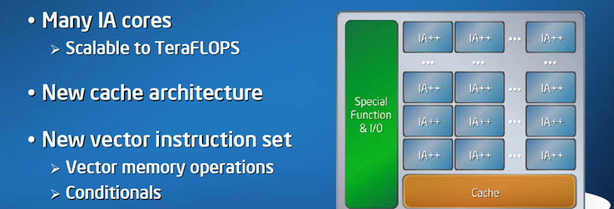

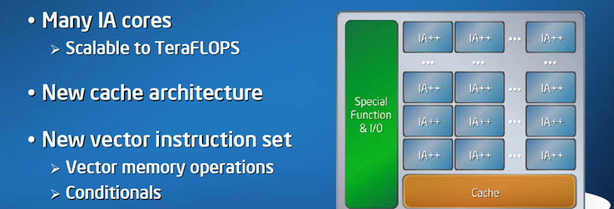

bit-tech: Given Intel's approach to using Intel Architecture (IA) in Larrabee, and as an x86 company yourself, do you think it's because Intel are using IA specifically that it's the problem?

RH: They really have a whole host of problems: some of which I wouldn't want to describe in too much detail because it points them in the right direction and they've got their own engineers to see that. The x86 instruction set is absolutely not ideal for graphics and by insisting on supporting the whole of that instruction set they have silicon which is sitting around doing nothing for a significant amount of time.

By building an in-order CPU core - which is what they are doing on Larrabee - they are going to get pretty poor serial performance. When the code is not running completely parallel their serial performance will be pretty dismal compared to modern CPUs. As far as I can tell they haven't done a fantastic job of latency hiding either - it's hyperthreaded like mad and has a huge, totally conventional CPU cache. Well it shouldn't come as a big surprise that it's simply not what we do. Our GPU caches simply don't work like CPU caches and they are for flushing data out of the pipeline at one end and preparing it to be inserted at the other - a read and write cache to keep all the GPU cores filled. One large cache and lots of local caches for the cores is not a great design. On top of which it doesn't actually have enough fixed function hardware to take on the problem as it's set out at the moment, so it needs to be rearchitected if Intel is to have a decent chance of competing.
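The trade-off between many slow cores and a few fast ones can be put into rough numbers with Amdahl's law. The sketch below is only an illustration of that general argument: the core counts, the relative per-core speed of 0.25 and the parallel fractions are hypothetical values chosen for the example, not figures for Larrabee or any real chip.

```python
def amdahl_speedup(parallel_fraction: float, cores: int,
                   core_speed: float = 1.0) -> float:
    """Speedup over a single baseline core, following Amdahl's law.

    parallel_fraction: share of the work that scales across cores (0..1).
    cores: number of cores available for the parallel share.
    core_speed: per-core speed relative to the baseline core
        (hypothetical; 0.25 means each core is four times slower).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1 or core_speed <= 0:
        raise ValueError("cores must be >= 1 and core_speed must be > 0")
    # The serial share runs on one core at that core's speed.
    serial_time = (1.0 - parallel_fraction) / core_speed
    # The parallel share is spread evenly over all cores.
    parallel_time = parallel_fraction / (core_speed * cores)
    return 1.0 / (serial_time + parallel_time)


if __name__ == "__main__":
    # Hypothetical comparison: 32 slow cores against 4 fast cores.
    for fraction in (0.90, 0.99):
        many_slow = amdahl_speedup(fraction, cores=32, core_speed=0.25)
        few_fast = amdahl_speedup(fraction, cores=4, core_speed=1.0)
        print(f"parallel={fraction:.2f}  32 slow: {many_slow:.2f}x  "
              f"4 fast: {few_fast:.2f}x")
```

Under these assumed numbers the four fast cores come out ahead when 90 per cent of the work is parallel, and the 32 slow cores only pull ahead when the parallel share reaches 99 per cent, which is the sense in which weak serial performance hurts whenever code is not running completely parallel.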

bit-tech: IF - given we don't know yet - when Nvidia's Fermi cards finally arrive they are faster than your HD 5970-

RH: -Well if it's not faster and it's 50 per cent bigger, then they've done something wrong-

bit-tech: -well if it is then, do you have something else up your sleeve to compete?

RH: Let me say that I suspect that through 2010 it's possible there will be moments where we don't have the fastest graphics card, but I expect that for the majority of 2010 we'll have the fastest card you can buy. I've good reason for believing that but I can't talk about unannounced products or any of the stuff we're doing to secure that. What I can say, in reference to what I said earlier, is that we're working on the two thrusts of physics, which are very important, and also with the three companies on enabling GPU physics-

bit-tech: -so we'll see AMD GPU physics in 2010?

RH: Bullet should be available certainly in 2010, yes. At the very least for ISVs to work with to get stuff ready.

The other thing is that all these CPU cores we have are underutilised and I'm going to take another pop at Nvidia here. When they bought Ageia, they had a fairly respectable multicore implementation of PhysX. If you look at it now it basically runs predominantly on one, or at most two, cores. That's pretty shabby! I wonder why Nvidia has done that? I wonder why Nvidia has failed to do all their QA on stuff they don't care about - making it run efficiently on CPU cores - because the company doesn't care about the consumer experience; it just cares about selling you more graphics cards by coding it so the GPU appears faster than the CPU.

It's the same thing as Intel's old compiler tricks that it used to do; Nvidia simply takes out all the multicore optimisations in PhysX. In fact, if coded well, the CPU can tackle most of the physics situations presented to it. The emphasis we're seeing on GPU physics is an over-emphasis that comes from one company having GPU physics... promoting PhysX as if it's God's answer to all physics problems, when actually it's more a solution in search of problems.
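As a rough picture of what spreading a physics step across CPU cores means, the sketch below splits a set of particles into chunks and integrates each chunk in its own worker. It is a minimal illustration under stated assumptions, not how PhysX, Havok or Bullet are implemented: the names `integrate_chunk` and `step_parallel`, the gravity-only model and the worker count are all hypothetical. In standard CPython, threads will not actually speed up pure-Python arithmetic because of the global interpreter lock; a native engine would use real threads or a job system, so only the decomposition is the point here.

```python
from concurrent.futures import ThreadPoolExecutor

GRAVITY = -9.81  # m/s^2, the only force in this toy model


def integrate_chunk(particles, dt):
    """Advance (height, velocity) pairs by one semi-implicit Euler step."""
    advanced = []
    for height, velocity in particles:
        velocity += GRAVITY * dt   # update velocity first
        height += velocity * dt    # then position, using the new velocity
        advanced.append((height, velocity))
    return advanced


def step_parallel(particles, dt, workers=4):
    """Split particles into contiguous chunks and integrate each in a worker.

    Particles do not interact in this model, so the chunks are independent
    and the result matches a single-threaded pass over the whole list.
    """
    chunk = max(1, -(-len(particles) // workers))  # ceiling division
    chunks = [particles[i:i + chunk] for i in range(0, len(particles), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(integrate_chunk, chunks, [dt] * len(chunks))
        # Flatten the per-chunk results back into one list, in order.
        return [p for part in results for p in part]


if __name__ == "__main__":
    falling = [(float(i), 0.0) for i in range(8)]
    print(step_parallel(falling, dt=0.01, workers=4))
```

Real engines also have to handle collisions and constraints that couple particles together, which is where the partitioning gets harder than in this independent-particle case.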

bit-tech: OK finally, the question we often get asked by our readers is: If someone owns a HD 3870 or a GeForce 8800 series graphics card, why should they upgrade to the latest HD 5700 or 5800 series products when these cards still play most games well enough?

RH: First of all there's the DirectX 11 graphics - BattleForge runs 30 per cent faster at maximum settings, running on DirectX 11 hardware using the DirectX 11 path, than it does on the same piece of hardware on the DirectX 10 path. DirectX 11 is inherently more efficient: it's faster at rendering exactly the same pixels.

Now if your HD 3870 already gives you an acceptable frame rate on the games you buy then, yes, you don't need to upgrade it. I'm not trying to suggest everyone upgrades even if they don't need to, but if you play the current and upcoming games and you try the experience of something like Eyefinity, then I think you'll find you have a gorgeously immersive experience. I love Eyefinity, despite the fact I was initially a sceptic when it was being designed and first made - I wondered whether there would be enough rendering horsepower in a HD 5850 or 5870 and if it could make that much difference, but if you play it for an hour or so then you can see it's a tangible advantage.

We set up a LAN game in the US about a month back with a competition that included a bunch of Eyefinity systems alongside non-Eyefinity systems that were otherwise identical, and we just let people play and compete to see what their scores were in games. We found that Eyefinity was, on average, an advantage for the players, whether it was a racing game or a shooting game.

If your HD 3870 works - then great, stick with it for now, but make sure you keep updating the Catalyst drivers regularly because we keep optimising and improving the experience even for older hardware. If you want the best experience though, go for a high performance but good value HD 5850 or something similar.

bit-tech: I bet you must sell plenty, because we keep getting asked all the time where to find a HD 5850.

RH: Actually we're working really hard on that supply and the numbers are going up quite dramatically - we've sold over 800,000 units of DirectX 11 hardware already-

bit-tech: -Are you going to have the millionth sale as someone wins a free Eyefinity kit?

RH: Ha! Well the trouble is we don't sell them directly so we couldn't identify the millionth, but I expect when we see our sales records roll over that mark we'll definitely make some noise about that.

