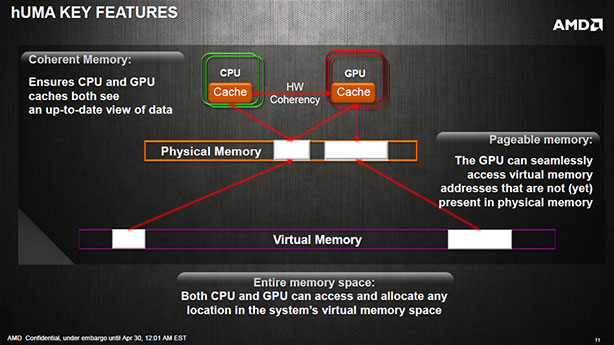

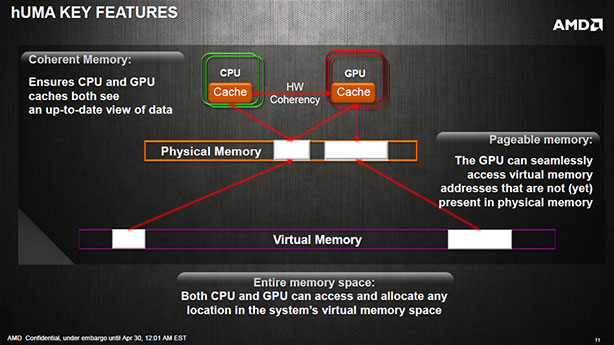

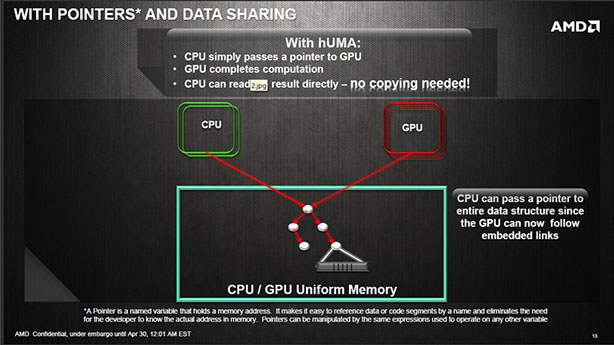

AMD has provided a few more details about its upcoming Heterogeneous System Architecture (HSA), revealing the name of the unified memory system it will use: heterogeneous Uniform Memory Access (hUMA).

HSA is AMD's big vision for its future APUs. Like its existing APUs, HSA chips will feature a CPU and GPU on one piece of silicon, but the big innovation is that the two units will now share memory directly, hence hUMA.

On current AMD and Intel APUs, the CPU and GPU have separate memory blocks. For the GPU to do any processing, the relevant data must first be copied from CPU memory to GPU memory, then copied back once the processing is finished. This creates a severe performance bottleneck and greatly increases complexity for programmers.

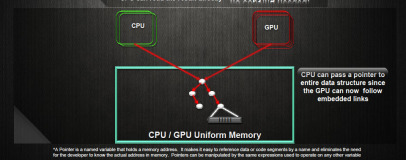

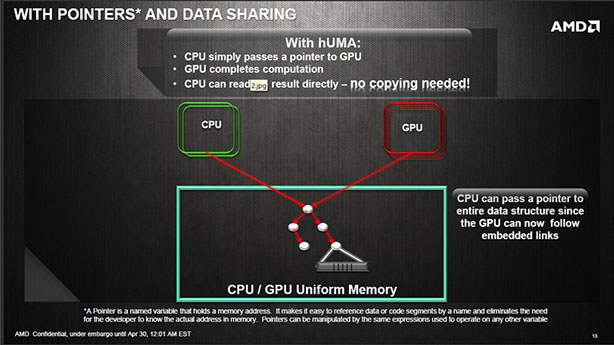

Unifying the two blocks of memory and allowing the CPU and GPU to access the same data directly eliminates the performance overhead of copying the data and greatly reduces programming complexity.
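The difference between the two models can be sketched in a few lines of ordinary Python. This is only a toy illustration running entirely on the CPU, not HSA or GPU code: the function names `offload_with_copies` and `offload_shared` are made up for this example, the doubling loop stands in for whatever work a GPU would do, and the returned byte counts simply tally how much data had to be moved.

```python
def offload_with_copies(host_data: bytearray) -> int:
    """Toy model of separate CPU and GPU memory; returns bytes copied."""
    # Hypothetical "GPU memory": a second buffer holding a copy of the data.
    device_data = bytearray(host_data)        # copy CPU memory -> "GPU" memory
    for i in range(len(device_data)):         # stand-in for the GPU's work
        device_data[i] = (device_data[i] * 2) % 256
    host_data[:] = device_data                # copy results back to CPU memory
    return 2 * len(host_data)                 # one copy in, one copy out


def offload_shared(shared_data: bytearray) -> int:
    """Toy model of a single shared memory block; returns bytes copied."""
    view = memoryview(shared_data)            # same bytes, no copy is made
    for i in range(len(view)):                # stand-in for the GPU's work
        view[i] = (view[i] * 2) % 256
    return 0                                  # nothing had to be moved


if __name__ == "__main__":
    a = bytearray(range(8))
    b = bytearray(range(8))
    copied_a = offload_with_copies(a)
    copied_b = offload_shared(b)
    # Both approaches produce the same result; only the copy traffic differs.
    print(copied_a, copied_b, a == b)
```

In the first function the programmer has to manage two buffers and keep them in sync; in the second there is only one buffer to reason about, which is the simplification AMD is promising.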

AMD highlighted what it sees as the top ten benefits of HSA in a recent presentation:

- Much easier for programmers

- No need for special APIs

- Move CPU multi-core algorithms to the GPU without recoding for absence of coherency

- Allow finer grained data sharing than software coherency

- Implement coherency once in hardware, rather than N times in different software stacks

- Prevent hard to debug errors in application software

- Operating systems prefer hardware coherency - they do not want the bug reports to the platform

- Probe filters and directories will maintain power efficiency

- Full coherency opens the doors to single source, native and managed code programming for heterogeneous platforms

- Optimal architecture for heterogeneous computing on APUs and SoCs

Some of these bullet points are clearly digs at CUDA, Nvidia's current system for GPU-accelerated programming, which uses a software layer to interpret simple programmer input and automatically handle the complications of memory management (among other things). HSA shouldn't require this software layer.

hUMA is essentially just a bit of branding that refers to the single memory address space the company's upcoming HSA APUs will use. It harks back to the Uniform Memory Access (UMA) nomenclature of early multicore CPUs, where each CPU core started to share the same memory, and adds "heterogeneous" in reference to HSA.

HSA isn't just an AMD project, though; it is centred around the HSA Foundation, "whose goal is to make it easy to program for parallel computing." The foundation includes other high-profile members such as ARM, Qualcomm and Samsung.

The arrival of HSA is still some way off, with the first AMD chips to use the architecture expected early next year. However, the PlayStation 4 is expected to feature an HSA-type processor, so we'll get some indication of what to look forward to when that console arrives in Q4 this year.
