Nvidia has announced plans to release a combined CPU and GPU product, using its ARM licence to produce a system-on-chip design under the codename Project Denver.

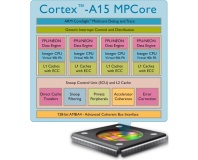

Announced at CES, the project is clearly an extension of the company's current Tegra ARM-based chips, which will get an upgrade to ARM's Cortex-A15 processor design in the near future. This time, however, the chips won't be powering mobile devices. Instead, the company is looking to encroach on the territory of rivals AMD and Intel with chips for PCs, servers and even supercomputers.

Speaking to press at the Consumer Electronics Show, Nvidia chief Jen-Hsun Huang described ARM as 'the fastest-growing CPU architecture in history,' and explained that Nvidia is looking to ride the wave by 'designing a high-performing ARM CPU core in combination with our massively parallel GPU cores to create a new class of processor.'

Although firm details of Project Denver are still being kept under wraps, the SoC design will combine a multi-core ARM CPU with a GeForce GPU. Nvidia's mention of server and supercomputer uses for the Denver chips also suggests that the company will be producing boards capable of accepting multiple Denver chips, resulting in many-core server systems capable of both CPU and GPGPU processing.

The move marks a sea-change from Huang's stance in 2007, when he firmly denied that Nvidia would be launching a CPU, but provides a much-needed response to criticisms in 2009 from Intel chief Paul Otellini, who claimed that Nvidia couldn't compete without a CPU of its own.

Currently, ARM is the most popular architecture in the world of smartphones and embedded systems, vastly outselling rival x86 designs from companies such as Intel, AMD and VIA. However, the architecture has been under-represented in the desktop and server markets, largely because of a lack of support in mainstream operating systems.

That's all set to change, though, as Microsoft has also confirmed rumours that the next release of Windows will be made available for both ARM and x86 platforms, along with an ARM-compatible release of Microsoft Office.

With Microsoft backing the architecture, 2011 could prove a good year for ARM, while also potentially signalling the end of the x86 monopoly on the desktop.

Are you pleased to see more companies investigating the possibilities for ARM in the desktop and server markets, or do you think too much has been invested in the development of x86 to switch architectures now? Share your thoughts over in the forums.
