Graphics cards are inherently good at parallel processing tasks: it's long been considered true, but support for the theory has come from an unlikely source - CPU manufacturer Intel.

As reported over on iTworld, the chip giant set out to disprove the myth that GPUs offer a 100x speed boost over a CPU in parallel processing tasks. In other words, it was trying to keep selling its top-end CPUs rather than watch high-performance computing clusters move to GPU-based supercomputers such as the FASTRA II.

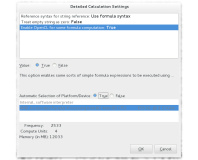

While Intel successfully debunked the myth that moving your parallel processing tasks onto the GPU via CUDA or OpenCL would net you a 100x performance boost, it failed to show that there was no boost at all. Rather, the final figures showed that the Nvidia GeForce GTX 280 used in the test outperformed the 3.2GHz Core i7 960 by a factor of 2.5 on average, with certain functions running up to 14 times faster on the GPU than on the CPU.

That's an embarrassing result for Intel - and doubly so when you realise that the graphics card used was released almost exactly two years ago in June 2008, whereas the CPU is from October 2009.

Nvidia, naturally, is crowing about the test results, with the company's general manager of GPU computing, Andy Keane, blogging that "it's a rare day in the world of technology when a company you compete with stands up at an important conference and declares that your technology is only up to 14 times faster than theirs."

Despite Intel's results, the day when we can ditch our CPUs is still some way off: while GPUs deliver big gains in massively parallel tasks, a lot of day-to-day computing is serial in nature - and thus runs faster on a CPU.

Are you shocked to see that an elderly Nvidia graphics card can beat one of Intel's more recent processors, or did you always know that GPGPU computing was the future? Share your thoughts over in the forums.