AMD has taken the wraps off its latest range of graphics cards, revealing the R9 and R7 series.

The full lineup, from entry-level to flagship, is led by the new R9 290X, AMD's fastest graphics card yet, and ends with the R7 250, a sub-$89 card.

The new flagship is the world's first 5 TFLOPS graphics card, an 11 percent increase over the 4.5 TFLOPS of Nvidia's GTX Titan.

The card also features 300GB/sec memory bandwidth, which AMD claims allows for over 100 layers of complex rendering effects in real time. It can also generate 4 billion triangles per second from its geometry engine.

To achieve all this, the card packs 6.2 billion transistors.

As revealed in our sneak peek yesterday, the new card is a typical-looking dual-slot model with a large blower-type cover featuring an intriguing array of grooves, possibly to improve airflow.

The card draws power through one six-pin and one eight-pin auxiliary input, and its outputs comprise two dual-link DVI ports, a full-size DisplayPort and a full-size HDMI port.

No CrossFire connectors are present on the card, hinting at a possible connector-less solution for multi-GPU configurations.

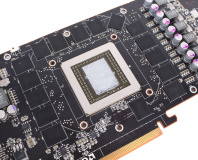

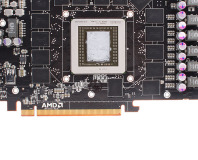

Few details of the chip at the core of the R9 290X have been revealed, but we expect to hear more as the announcement progresses.

The full lineup of new cards is as follows:

- R7 250 - <$89

- R7 260X - $139

- R9 270X - $199

- R9 280X - $299

- R9 290X
