Intel has announced that it will unveil a new optical interconnect for servers, with a claimed peak transfer rate of 1.6Tb/s, at the Intel Developer Forum (IDF) in San Francisco next month.

Designed to replace existing inter-server optical interconnects, the new standard, dubbed MXC, promises numerous improvements, including smaller connectors and, crucially, significantly higher data throughput. Although MXC development began two years ago, Intel is only now making it fully public, with a presentation planned for the IDF in September.

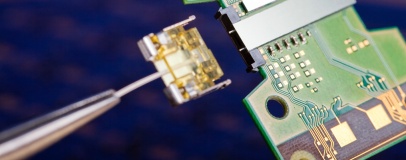

Ahead of the unveiling, the company has published a teaser for the presentation that makes some bold claims about the technology's capabilities. Intel claims that MXC, which builds on the company's silicon photonics work and on glass company Corning's latest-generation optical fibre, dubbed ClearCurve LW, can deliver peak transfer rates of 1.6Tb/s over short distances from a compact connector.

High-speed inter-server communication is critical to high-performance computing (HPC). A typical HPC system or supercomputer is built from multiple server nodes operating as a massively parallel cluster, and all the compute performance in the world is of no use if you can't get data from one server to another quickly enough. Intel has already invested heavily in interconnect technology, picking up Cray's Aries and Gemini interconnects and QLogic's InfiniBand business last year alone.

A look at InfiniBand, for which Intel paid an impressive $125 million in cash, shows just how big a leap MXC represents: the top-end 12x EDR InfiniBand link provides 300Gb/s of throughput, just under a fifth of the peak performance promised by Intel's MXC. The technology also has applications in longer-range communications: while the 1.6Tb/s figure requires very short fibre-optic cabling, the company has tested MXC over ClearCurve LW fibre at distances of up to 300m, achieving 25Gb/s.
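
To put the InfiniBand comparison in numbers, here is a minimal Python sketch that uses only the headline figures quoted in this article: 1.6Tb/s for MXC and 300Gb/s for 12x EDR InfiniBand. It assumes 12x EDR means 12 lanes at a nominal 25Gb/s each, ignores encoding and protocol overhead, and moves a hypothetical 1TB payload purely for illustration. The helper names `ratio` and `transfer_seconds` are our own, not anything Intel has published.

```python
# Headline figures quoted in the article, in gigabits per second.
MXC_PEAK_GBPS = 1600.0   # 1.6Tb/s, short-reach peak claimed by Intel
IB_12X_EDR_GBPS = 300.0  # top-end 12x EDR InfiniBand link

# Assumption: 12x EDR is 12 lanes at a nominal 25Gb/s each.
EDR_LANE_GBPS = 25.0
IB_LANES = 12


def ratio(a_gbps: float, b_gbps: float) -> float:
    """Return link a's bandwidth as a fraction of link b's."""
    return a_gbps / b_gbps


def transfer_seconds(payload_gb: float, link_gbps: float) -> float:
    """Ideal time to move payload_gb gigabytes over a link_gbps link.

    Converts bytes to bits (x8) and ignores all protocol overhead,
    so real-world times would be longer.
    """
    return payload_gb * 8 / link_gbps


if __name__ == "__main__":
    # Sanity check: 12 lanes x 25Gb/s should give the quoted 300Gb/s.
    assert IB_LANES * EDR_LANE_GBPS == IB_12X_EDR_GBPS
    print(f"12x EDR / MXC = {ratio(IB_12X_EDR_GBPS, MXC_PEAK_GBPS):.4f}")
    # Hypothetical 1TB (1000GB) payload, chosen purely for illustration.
    for name, rate in [("12x EDR InfiniBand", IB_12X_EDR_GBPS),
                       ("MXC peak", MXC_PEAK_GBPS)]:
        print(f"{name}: {transfer_seconds(1000, rate):.2f} s")
```

Run as a script, it prints a ratio of 0.1875, just under a fifth, and ideal transfer times of about 26.67 seconds over 12x EDR InfiniBand versus 5.00 seconds at MXC's claimed peak. Real-world throughput on either link would be lower.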

Sadly, Intel is keeping a few details under wraps until the presentation itself: in particular pricing, the production schedule, and when data centre customers are likely to be able to buy hardware with MXC connections. It's also not yet clear whether Intel will release MXC as a licensable standard for all to use or keep it exclusive to Intel-powered servers, although we'd guess it will opt for the former in order to encourage widespread adoption.

