Researchers at the Massachusetts Institute of Technology have developed an all-optical 'transistor', which they claim could pave the way for optical computers as well as powerful quantum computing systems.

The team from MIT's Research Laboratory of Electronics, in partnership with Harvard University and the Vienna University of Technology, has released details of its experimental realisation of an optical switch that can be controlled using a single photon. This makes it possible for light to interact with light, something that does not normally happen: two photons that meet in a vacuum simply ignore each other and pass straight through.

The team compares the switch to the electronic transistor, the revolutionary device that replaced the vacuum tube and made today's high-performance computing equipment possible, and describes its creation as the equivalent of a transistor for light rather than electricity.

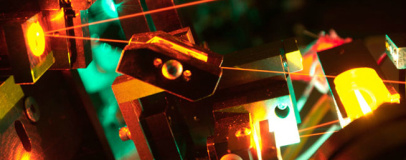

The system takes the form of an optical resonator: a pair of highly reflective mirrors arranged to act as a switch. 'If you had just one mirror, all the light would come back,' explains Vladan Vuletić, the Lester Wolfe Professor of Physics at MIT, in the institute's announcement of the research. 'When you have two mirrors, something very strange happens.' When the switch is on, a light beam can pass through both mirrors; when it is off, the intensity of the transmitted beam is reduced by around 80 per cent.

The mirrors achieve this trick by being spaced so that the gap between them matches the wavelength of the light, strictly a whole number of half-wavelengths, which puts the cavity on resonance. Because light behaves as a wave, the reflections bouncing between the two mirrors reinforce one another and an electromagnetic field builds up in the gap, making the forward-facing mirror effectively transparent, but only to that particular wavelength of light.
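The resonance condition described above can be illustrated with the textbook Airy formula for an ideal, lossless two-mirror (Fabry-Pérot) cavity. This is a minimal sketch, not a model of the MIT device: it ignores the cesium atoms and the single-photon gating entirely. The wavelength (852 nm, close to a cesium absorption line), the mirror reflectance (0.99) and the gap size are assumed values chosen for illustration, not figures from the paper. It shows only that such a cavity passes almost all light when the gap is a whole number of half-wavelengths, and blocks nearly all of it when the gap is slightly off.

```python
import math


def fabry_perot_transmission(spacing_m: float, wavelength_m: float, reflectance: float) -> float:
    """Airy transmission of an ideal, lossless two-mirror cavity at normal incidence.

    Assumes an empty gap (refractive index 1) and identical mirrors with
    intensity reflectance `reflectance`; no atoms or absorption are modelled.
    """
    # Round-trip phase picked up by light crossing the gap and back.
    delta = 4 * math.pi * spacing_m / wavelength_m
    # Coefficient of finesse: grows rapidly as the mirrors approach perfect reflectance.
    finesse_coeff = 4 * reflectance / (1 - reflectance) ** 2
    return 1 / (1 + finesse_coeff * math.sin(delta / 2) ** 2)


if __name__ == "__main__":
    WAVELENGTH = 852e-9  # assumed, near the cesium D2 line
    R = 0.99             # assumed mirror reflectance
    m = 1000             # assumed: the gap spans 1000 half-wavelengths

    # Gap is exactly m half-wavelengths: the cavity is on resonance.
    on_resonance = fabry_perot_transmission(m * WAVELENGTH / 2, WAVELENGTH, R)
    # Gap lengthened by a quarter of a half-wavelength: far off resonance.
    detuned = fabry_perot_transmission((m + 0.25) * WAVELENGTH / 2, WAVELENGTH, R)

    print(f"on resonance: {on_resonance:.3f}")  # on resonance: 1.000
    print(f"detuned:      {detuned:.2e}")       # detuned:      5.05e-05
```

With highly reflective mirrors the transmission peak is very narrow, which is why the cavity is transparent only to one particular wavelength.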

The team claims that the experimental discovery could lead to mainstream optical computing, in which the electrons of a computer chip are swapped out for photons. Such an optical computer could run significantly cooler, with much less energy wasted as heat, and would in turn draw less power than its electronic equivalent. The system also holds promise for the growing field of quantum computing, in which quantum bits, or 'qubits', are held in superposition, being both 0 and 1 simultaneously, in order to solve problems in parallel; photons are easier to hold in superposition than electrons.

The team's work is unlikely to leave the lab any time soon, however. The current prototype works by filling the gap between the mirrors with a supercooled cesium gas, which does not lend itself to being shrunk down and duplicated a few million times to form a modern processor. 'For the classical implementation, this is more of a proof-of-principle experiment showing how it could be done,' admits Vuletić. 'One could imagine implementing a similar device in solid state — for example, using impurity atoms inside an optical fibre or piece of solid.'

MIT's work is likely to attract interest from chipmakers, many of whom are investing in optical computing technologies in response to the growing difficulty of shrinking electronic components to ever-smaller sizes. For now, however, optics are likely to be reserved for inter-chip communications, such as IBM's Holey Optochip and the work being carried out at the Intel co-funded Optoelectronic Systems Integration in Silicon Centre at the University of Washington.

The team's paper is published in the most recent issue of the journal Science.
