AMD today announced that it’s ‘optimizing its cost structure’, a nice way of saying that it’s going to make 10 per cent of its global workforce redundant while terminating ‘existing contractual commitments’.

The reduction in staff is expected to save the company up to $118 million over the course of 2012. Added to the $90 million AMD says it’s going to save through ‘operational efficiencies’, that means the company could shave up to $208 million from its costs next year.

AMD has stated that it expects the restructuring plan ‘to take place primarily during the fourth quarter of 2011, with some activities extending into 2012’. Interestingly, it also announced that the plan itself will cost $105 million to implement, a cost presumably made up of redundancy cheques and relocation expenses.

Unsurprisingly, AMD is remaining upbeat in public, saying the plan will ‘strengthen the company’s competitive positioning’ and ‘rebalance [its] global workforce skillsets, helping AMD to continue delivering industry-leading products’.

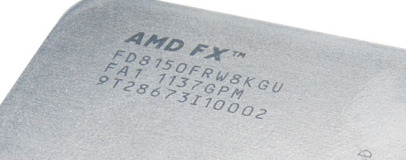

It’s unclear whether this move has been in the works for a while or whether it’s a direct result of the worldwide panning that AMD’s new Bulldozer-based FX processors endured. That, coupled with what we’re hearing is low demand for its Llano-based chips (which we really like, by the way), doesn’t make for a rosy outlook for the company.

Do you think AMD’s belt-tightening will keep it competitive? Will its new Bulldozer-based Opterons save its bacon? Let us know your thoughts in the forum.
