Turkish tech site Donanim Haber claims to have received information about AMD's forthcoming Radeon HD 7000 series of GPUs, including news that the chips will support the PCI-E 3 standard.

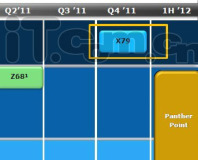

The inclusion of PCI-E 3 support isn’t a huge surprise: MSI has already announced that its Z68A-GD80 (G3) motherboard will support the standard, and Intel’s Ivy Bridge processors are rumoured to incorporate PCI-E 3 support too.

The PCI-E 3 spec was finalised in November 2010. It essentially doubles the bandwidth per lane while remaining backwards compatible with previous generations of PCI-E.
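Why "essentially" doubles? The raw transfer rate only rises from 5 GT/s (PCI-E 2.0) to 8 GT/s (PCI-E 3.0), but the line encoding changes from 8b/10b (20 per cent overhead) to 128b/130b (about 1.5 per cent overhead). The short calculation below is a sketch using those published spec figures, which come from the PCI-E standards rather than from Donanim Haber's report; it ignores packet and protocol overhead, so real-world throughput will be lower.

```python
# Rough per-lane, per-direction PCI-E bandwidth from signalling rate and line encoding.
# Figures are the nominal spec values; packet/protocol overhead is ignored.
GENERATIONS = {
    # name: (transfer rate in GT/s, payload bits, encoded bits)
    "PCI-E 2.0": (5.0, 8, 10),      # 8b/10b encoding
    "PCI-E 3.0": (8.0, 128, 130),   # 128b/130b encoding
}

def lane_bandwidth_mb_s(rate_gt_s, payload_bits, encoded_bits):
    """Usable bandwidth of one lane in one direction, in MB/s (decimal units)."""
    usable_gbit_s = rate_gt_s * payload_bits / encoded_bits
    return usable_gbit_s * 1000 / 8  # Gbit/s -> MB/s

for name, params in GENERATIONS.items():
    per_lane = lane_bandwidth_mb_s(*params)
    print(f"{name}: {per_lane:.1f} MB/s per lane, {per_lane * 16 / 1000:.2f} GB/s for x16")
```

This works out at 500 MB/s per lane for PCI-E 2.0 against roughly 985 MB/s for PCI-E 3.0, so an x16 graphics slot goes from about 8 GB/s to about 15.75 GB/s in each direction.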

However, the new spec doesn’t raise the power ceiling. Instead, it simply consolidates the existing 150W and 300W standards, even though some of today's graphics cards already exceed 300W.
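The 150W and 300W figures map onto the connector ratings used by graphics cards: 75W from the x16 slot, 75W per 6-pin auxiliary connector and 150W per 8-pin connector. These ratings are general PCI-E knowledge, not details from the original report, and the helper below is a hypothetical illustration of the arithmetic. It shows how a card with two 8-pin connectors can be rated above the 300W ceiling.

```python
# Nominal maximum board power for common connector layouts (assumed PCI-E ratings).
SLOT_W = 75        # power available from the x16 slot itself
SIX_PIN_W = 75     # each 6-pin auxiliary connector
EIGHT_PIN_W = 150  # each 8-pin auxiliary connector

def board_power(six_pin=0, eight_pin=0):
    """Hypothetical helper: total nominal power budget in watts."""
    return SLOT_W + six_pin * SIX_PIN_W + eight_pin * EIGHT_PIN_W

print(board_power(six_pin=1))               # 150
print(board_power(six_pin=1, eight_pin=1))  # 300
print(board_power(eight_pin=2))             # 375
```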

We expect to see the next generation of graphics cards this autumn, so we’ll have to wait a few months to find out what difference PCI-E 3 support makes.

Do you think the extra bandwidth will net you any extra performance? Is it wise to keep the 300W power limit, or is this an oversight? Let us know your thoughts in the forums.
