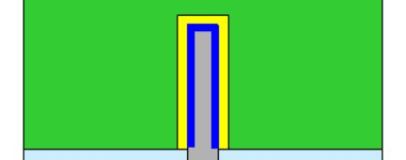

Intel has revealed a brand new type of transistor, which uses a three-dimensional design to operate in a smaller space and consume less power than existing designs.

The new transistors are called Tri-Gate units, in reference to their use of three conductive surfaces. Intel claims they offer an overall 50% power saving over current planar transistors, including greatly reduced power leakage in the off state. Alternatively, the company says, the new transistors can deliver 37% faster performance at the same power draw.

The three-dimensional design additionally allows the new transistors to be packed more densely on a silicon wafer than was previously possible, enabling a reduction in the size, and hence price, of future chips.

'The performance gains and power savings of Intel's unique 3-D Tri-Gate transistors are like nothing we've seen before,' said Intel senior fellow Mark Bohr. 'The low-voltage and low-power benefits far exceed what we typically see from one process generation to the next.' Despite these advances, Bohr said that Tri-Gate transistor wafers would cost only around 2-3% more to manufacture than 32nm transistor wafers using planar designs.

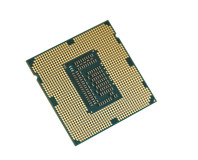

Though the Tri-Gate design was first proposed by Intel engineers back in 2002, it has taken until now to reach high-volume production. The first chips to use the technology will be Intel's forthcoming Ivy Bridge CPUs, the 22nm successors to Sandy Bridge that are expected to arrive at the end of 2011.

Intel then plans to extend the technology across its range, including to the low-power Atom processor series.

Are you looking forward to the performance benefits that these new transistors could bring? How long will it take Intel's competitors to catch up? Let us know your thoughts in the forums.
