Intel has announced that "limited quantities" of an experimental processor featuring 48 physical processing cores will be shipping to researchers by the middle of the year.

As reported over on ITworld, the Intel Labs evangelist Sean Hoehl announced the company's plans to provide academic researchers with early versions of its massively multi-core processors as part of the company's Tera-scale research programme - almost certainly a variant on its Single Chip Cloud Computer project.

Whilst hard details as to the precise specifications of the processor weren't made available, engineer Christopher Anderson hinted that each physical core on the chip would clock in at around the same speed as an Atom processor - so we're looking at anything between 1.2GHz and 1.8GHz per core. While that might not sound like much, remember that Intel is building forty-eight of these on a single processor - and there'll be nothing to stop dual- and even quad-processor motherboards being manufactured to accept these new chips.
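To put the "forty-eight of these" point in numbers, here is a rough sketch that multiplies the hinted per-core clock range by the core count. It also covers the hypothetical dual- and quad-socket boards mentioned above. The assumptions are that every core runs at the same Atom-like clock and that clocks simply add up. That is a crude upper bound, not a performance prediction, since it ignores memory bandwidth, mesh latency and how well software parallelises. The names `CORES_PER_CHIP` and `aggregate_ghz` are purely illustrative.

```python
# Back-of-envelope aggregate clock figures for the 48-core research chip.
# Assumption: all cores run at the same Atom-like clock hinted at in the
# announcement (1.2GHz to 1.8GHz). Summed clock speed is only a rough upper
# bound on throughput, not a benchmark.

CORES_PER_CHIP = 48  # physical cores per processor, as announced


def aggregate_ghz(per_core_ghz: float, cores: int = CORES_PER_CHIP, sockets: int = 1) -> float:
    """Return the summed clock speed, in GHz, across all cores and sockets."""
    return round(per_core_ghz * cores * sockets, 1)


# Hypothetical single-, dual- and quad-socket configurations.
for sockets in (1, 2, 4):
    low = aggregate_ghz(1.2, sockets=sockets)
    high = aggregate_ghz(1.8, sockets=sockets)
    print(f"{sockets} socket(s), {CORES_PER_CHIP * sockets} cores: {low}-{high} GHz aggregate")
```

A single chip works out to 57.6 to 86.4GHz of combined clock. A hypothetical quad-socket board with 192 cores reaches 230.4 to 345.6GHz, provided the workload can actually keep every core busy.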

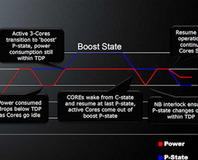

Interestingly, one fact that did come out during the announcement was the power draw of the new chip: depending on workload, the 48-core processor is expected to draw between 25 and 125 watts - with the massive difference due to the chip's ability to shut down groups of processing cores when they're not actively being used.

The prototype chip is based around the same mesh technology behind the more impressive 80-core version that the Tera-scale research team developed back in 2007, but unlike previous efforts, this latest version is a fully working processor - albeit in prototype form.

Sadly, Intel hasn't offered any hints on precisely when processors with this many cores will become a commercial reality - but at least this will give researchers time to develop the tools and skills required to take advantage of such a massively parallel processing platform.

Are you impressed that Intel has made it to a viable 48-core processor already, or are you only going to take notice when the triple-figure chips hit shop shelves? Is the future truly in many low-powered cores, or should the company be concentrating on fewer, higher-speed cores in its processors? Share your thoughts over in the forums.