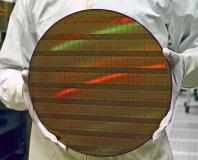

You probably thought that a 45nm transistor was pretty small, but Intel has today taken its silicon technology even further into the realms of the infinitesimal: the company has demonstrated the world’s first 32nm processors and announced massive plans for the technology.

The company plans to spend a whopping US$7 billion over the next two years on building four 32nm fabrication plants, creating 7,000 high-skill jobs in the US. One is already up and running in Oregon, where a second plant is scheduled to be running by the end of 2009. Meanwhile, two further fabs will be built in Arizona and New Mexico in 2010.

The 32nm processors are based on the same materials used in Intel’s 45nm chips, using a high-k gate dielectric and a metal gate, as opposed to the old SiO2 dielectric and polysilicon gate used in Intel’s previous 65nm chips. However, Intel was keen to point out that it has refined the high-k + metal gate technology, which the company says is now in its second generation.

The refinements include a reduction in the oxide thickness of the high-k dielectric from 1.0nm on a 45nm chip to 0.9nm on a 32nm chip, while the gate length has been squeezed down from 35nm to 30nm. As a result, Intel says that it has seen performance improvements of over 22 per cent from the new transistors. The company also claims that the second-generation high-k + metal gate technology has reduced the source-to-drain leakage even further than the 45nm-generation technology, meaning that the transistors require less power to switch on and off.
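To put those dimensions in perspective, here is a minimal sketch that works out the relative shrink from the figures quoted above. The helper name `percent_reduction` is our own, and the calculation says nothing about how Intel arrived at its 22 per cent performance figure.

```python
def percent_reduction(old, new):
    """Return the relative reduction from old to new, as a percentage."""
    return (old - new) / old * 100.0

# Dimensions quoted in the article, in nanometres
gate_length = percent_reduction(35.0, 30.0)     # 45nm chip -> 32nm chip
oxide_thickness = percent_reduction(1.0, 0.9)   # 45nm chip -> 32nm chip

print(f"Gate length: {gate_length:.1f}% shorter")
print(f"Oxide thickness: {oxide_thickness:.1f}% thinner")
```

In other words, the gate length shrinks by roughly a seventh and the oxide layer by a tenth between the two generations.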

Interestingly, Intel also says that the 32nm chips will be made using immersion lithography on ‘critical layers’, meaning that a refractive fluid will fill the gap between the lens and the wafer during the fabrication process. AMD is already using immersion lithography to make its 45nm CPUs, whereas Intel has so far stuck with dry lithography at 45nm.
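Why would a fluid help? A rough sketch using the standard Rayleigh criterion, where the smallest printable feature is k1 × wavelength / NA and the numerical aperture NA is the refractive index of the medium multiplied by the sine of the lens half-angle. Every number below is an illustrative assumption rather than anything Intel has disclosed: a 193nm light source, water (refractive index of about 1.44 at that wavelength) as the fluid, a k1 factor of 0.3 and a 70-degree half-angle.

```python
import math

def min_feature_nm(wavelength_nm, k1, n_medium, half_angle_deg):
    """Rayleigh criterion: smallest printable feature, in nanometres."""
    # Numerical aperture = refractive index of the gap medium * sin(half-angle)
    numerical_aperture = n_medium * math.sin(math.radians(half_angle_deg))
    return k1 * wavelength_nm / numerical_aperture

# Assumed, illustrative values (not taken from Intel's announcement)
WAVELENGTH_NM = 193.0   # assumed light source wavelength
K1 = 0.3                # assumed process factor
HALF_ANGLE_DEG = 70.0   # assumed lens half-angle

dry = min_feature_nm(WAVELENGTH_NM, K1, 1.0, HALF_ANGLE_DEG)    # air in the gap
wet = min_feature_nm(WAVELENGTH_NM, K1, 1.44, HALF_ANGLE_DEG)   # water in the gap

print(f"Dry: {dry:.1f}nm, immersion: {wet:.1f}nm")
print(f"Improvement factor: {dry / wet:.2f}")
```

With everything else held equal, the improvement factor is simply the refractive index of the fluid, which is why filling the gap lets the same optics resolve finer features.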

Commenting on the manufacturing facilities, Intel’s CEO Paul Otellini said that the factories would "produce the most advanced computing technology in the world." He added that "the chips they produce will become the basic building blocks of the digital world, generating economic returns far beyond our industry."

Intel says that its first 32nm chips will be ready for production in the fourth quarter of this year, and has announced a number of new products that will be based on the technology.

Got a thought on the announcement? Discuss in the forums.

