CES 2009: AMD President and CEO Dirk Meyer demonstrated the next era of cloud computing, the HD Cloud, during his keynote address at CES.

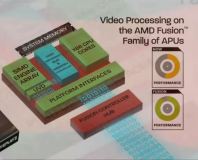

Meyer introduced the Fusion Render Cloud reference design, a GPU super computer designed to break the one petaFLOPS barrier with more than 1,000 graphics processors.

"We anticipate it to be the fastest super computer ever and it will be powered by OTOY's software for a singular purpose: to make HD cloud computing a reality," said Meyer. "We plan to have this system ready by the second half of 2009."

According to AMD, the Fusion Render Cloud will break one petaFLOPS with a tenth of the power consumption of today's fastest super computer, and it will need a significantly smaller footprint than a conventional super computer: it will fit in a single room rather than requiring a whole building. What's more, the Fusion Render Cloud will be upgradeable, unlike virtually any other super computer, so AMD anticipates further performance increases in the future.

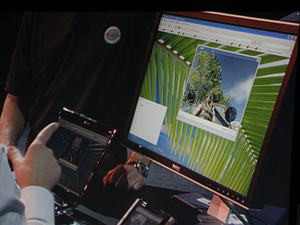

That's all well and good, but what would you use a 1,000+ GPU super computer for? Well, it's designed to run HD content over the Internet (or cloud) through your web browser. Meyer demonstrated full-resolution Blu-ray movie playback through a web browser before moving on to show Mercenaries 2 running without any lag whatsoever in the same web browser.

The demonstration was polished and impressive: the browser window could be moved around while the game was running without any adverse effect on the gaming experience. That has little to no real-world use, but it was designed to prove that this wasn't just a canned demo.

Meyer invited Richard Hilleman, Chief Creative Officer at Electronic Arts, on stage after the Mercenaries 2 demo. "At Electronic Arts, we have been lucky enough to be a part of the creation of a number of changes in the world of personal computer gaming. From the first PCs to CD gaming to the advent of Internet gaming, we have embraced each new evolution of technology as an opportunity to bring new experiences to our customers," he said. "OTOY and AMD are at the cutting edge of thin client gaming, and we look forward to the new customers we can reach and the new interactive expressions that emerge from revolutionary technology like the AMD Fusion Render Cloud."

There's no denying this is a massive step forward for cloud computing, but we have a few concerns. The biggest is that there were no details on how much bandwidth the HD cloud will require to deliver experiences like this. The demo was run across a wired network rather than over the Internet, which makes us wonder just how steep the requirements are. Nevertheless, that doesn't take away from how impressive this demo was.
