Microsoft hides cloud latency with DeLorean

August 22, 2014 | 10:47

Companies: #microsoft #microsoft-research #onlive

Microsoft's research arm claims it may have found a way around the biggest barrier to mass adoption of cloud gaming: the latency between the server and the client.

Cloud gaming has been poised to be the next big thing for a number of years now. While Nvidia has enjoyed some success with its Grid platform (servers packed with GeForce GPU hardware) and client-facing services like OnLive continue to roll on, cloud gaming is still a poor second best to local gaming. The premise of using remote server hardware to do the heavy lifting, so that you can play the latest PC games on low-end or even mobile hardware, is sound. The problem is the delay between entering an input at your end and the server responding to it, which rules out twitch gaming.

Microsoft Research's answer? Time travel, via a system its creators have dubbed DeLorean. In a white paper published this week, a team led by Kyungmin Lee details what it calls a 'speculative execution system', capable of hiding up to 250ms of network latency from the user, which makes it well suited to high-latency mobile networks. DeLorean works by rendering possible outcomes ahead of time, then displaying only the one that matches what the player actually did.
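To make the idea of speculative rendering a little more concrete, here is a toy sketch in Python. It is not DeLorean's code: the function names, the string stand-ins for rendered frames and the fixed list of candidate inputs are all hypothetical, and how the real system decides which outcomes are worth rendering is covered in the paper rather than here. The server renders one frame per plausible input before the real input arrives; if the player's actual input was among the guesses, the matching frame can be shown without waiting for a round trip.

```python
from typing import Callable, Dict, Iterable, List, Tuple

# Hypothetical renderer signature: (game state, player action) -> frame.
Render = Callable[[int, str], str]


def speculate(state: int, candidates: Iterable[str], render: Render) -> Dict[str, str]:
    # Server side (hypothetical): render one frame per plausible input
    # before the player's real input has arrived.
    return {action: render(state, action) for action in candidates}


def display(speculated: Dict[str, str], actual: str, state: int, render: Render) -> Tuple[str, bool]:
    # Client side (hypothetical): show the pre-rendered frame if the guess
    # covered the input the player actually made.
    if actual in speculated:
        return speculated[actual], True  # hit: no visible round trip
    # Miss: render on demand, i.e. the player waits a full round trip.
    return render(state, actual), False


def run(inputs: List[str], candidates: List[str], render: Render) -> Tuple[List[str], int]:
    # Play through a sequence of inputs, counting how often speculation hid the latency.
    frames: List[str] = []
    hits = 0
    for state, actual in enumerate(inputs):
        frame, hit = display(speculate(state, candidates, render), actual, state, render)
        frames.append(frame)
        hits += int(hit)
    return frames, hits


def render(state: int, action: str) -> str:
    # Stand-in for the expensive GPU work a real game server would do.
    return f"frame {state}: {action}"


frames, hits = run(["left", "fire", "jump"], ["left", "right", "fire"], render)
print(frames, hits)
```

In this toy run, "jump" was never speculated, so only two of the three inputs are hidden from the network delay. Rendering more candidates raises the hit rate but costs more server GPU time and bandwidth, which is the basic trade-off any speculative scheme of this kind has to manage.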

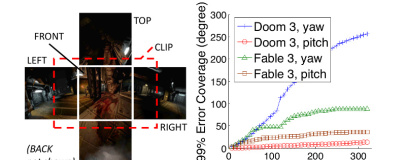

'To evaluate the prediction and speculation techniques in DeLorean, we use two high quality, commercially-released games: a twitch-based first person shooter, Doom 3, and an action role playing game, Fable 3,' the team writes in the paper's abstract. 'Through user studies and performance benchmarks, we find that players overwhelmingly prefer DeLorean to traditional thin-client gaming where the network RTT is fully visible, and that DeLorean successfully mimics playing across a low-latency network.'

The paper itself (PDF warning) goes into the technical details, but it's not clear when - or if - the technology will reach consumers. The team has, however, published the binaries for the DeLorean software, along with videos demonstrating its use.
