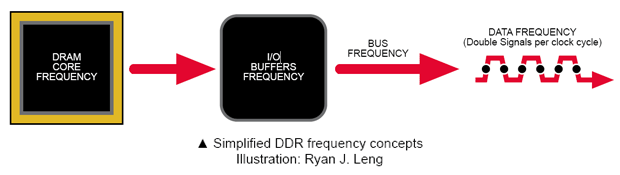

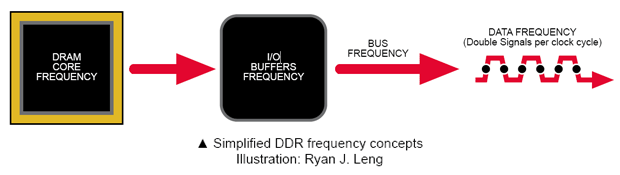

There are a few distinct but important concepts of speed to keep in mind: the DRAM core frequency, the Input/Output (IO) Buffer Frequency, the Memory Bus Frequency and the Data Frequency. Each one describes the performance of a different part of the memory system.

All computer memory modules are rated by their Data Frequency. For example, the “800” in DDR2-800 means the module operates at a Data Frequency of 800MHz (strictly, 800 million data transfers per second, or 800 MT/s). Its IO Buffer and Bus frequency, on the other hand, is 400MHz, while the DRAM core runs at only 200MHz.
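The clock relationships in the DDR2-800 example can be written as fixed ratios. The sketch below assumes those ratios (Data Frequency = 2 × IO/bus frequency = 4 × core frequency, which follows from DDR2 transferring data on both clock edges and using a 4n prefetch) hold for every DDR2 rating; the function name `ddr2_clocks` is purely illustrative.

```python
def ddr2_clocks(data_freq_mhz: float) -> dict:
    """Derive DDR2 clock domains from the rated Data Frequency (illustrative sketch).

    Assumes the ratios of the DDR2-800 example hold for any DDR2 rating:
    - IO buffer / memory bus clock = Data Frequency / 2 (data on both clock edges)
    - DRAM core clock = Data Frequency / 4 (4n prefetch)
    """
    return {
        "data": data_freq_mhz,
        "io_buffer_and_bus": data_freq_mhz / 2,
        "dram_core": data_freq_mhz / 4,
    }


for rating in (800, 1066):
    print(f"DDR2-{rating}:", ddr2_clocks(rating))
```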

The relationship between frequency (MHz) and data throughput (Mbps) is simple. A single signal carries 1 bit of data: a 0 or a 1. This bit is shown as a black circular “Action” dot in the diagram below. An 800MHz Data Frequency means that each data line sends 1 bit 800 million times per second, so the product of the two is 800 Megabits per second (Mbps) per line. (NB: bits and bytes are different: 8 bits = 1 byte, so 800Mbps is only 100MBps, or MegaBytes per second.)
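The arithmetic above is per data line. The sketch below repeats it and then, as an assumption carried over from the 64-bit desktop data path described later in this article, multiplies by 64 parallel lines to get a whole module's theoretical peak. The function names are hypothetical, and the figures ignore the command and control overhead discussed next.

```python
BITS_PER_BYTE = 8


def line_mbps(data_freq_mhz: float) -> float:
    """One data line carries 1 bit per transfer, so MHz x 1 bit = Mbps."""
    return data_freq_mhz * 1


def mbps_to_mbyteps(mbps: float) -> float:
    """Convert megabits per second to megabytes per second."""
    return mbps / BITS_PER_BYTE


def module_peak_mbyteps(data_freq_mhz: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak for a module with `bus_width_bits` data lines in parallel."""
    return mbps_to_mbyteps(line_mbps(data_freq_mhz) * bus_width_bits)


print(line_mbps(800))            # per line, in Mbps
print(mbps_to_mbyteps(800))      # per line, in MBps
print(module_peak_mbyteps(800))  # whole 64-bit module, in MBps
```

The 6400MBps module figure is why DDR2-800 modules are also sold under the PC2-6400 name.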

For a given Data Frequency, the actual (effective) data throughput is lower than the theoretical figure. For example, DDR2 at 800MHz does not use all of that frequency purely for data flow; a certain share of it is needed for command and control signalling. It is a bit like buying a 160GB hard disk and finding that only 149GB is available for your data after formatting it. Part of the drive is occupied by system-level information such as the Master Boot Record (MBR) and partition tables, although most of that particular gap comes from units: the manufacturer counts 1GB as 1,000,000,000 bytes, while the operating system counts 1,073,741,824 bytes.
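The article does not quantify the command and control overhead, so the sketch below treats it as a single hypothetical parameter, `data_fraction`: the share of bus time that actually carries data. The 0.75 used here is an arbitrary illustrative value, not a measured DDR2 figure, and `effective_mbyteps` is a hypothetical name.

```python
def effective_mbyteps(peak_mbyteps: float, data_fraction: float) -> float:
    """Effective throughput when only `data_fraction` of bus time carries data.

    Hypothetical model: the remainder of bus time is assumed to go to
    command and control signalling.
    """
    if not 0.0 < data_fraction <= 1.0:
        raise ValueError("data_fraction must be in the range (0, 1]")
    return peak_mbyteps * data_fraction


peak = 800 * 64 / 8  # DDR2-800 on a 64-bit module: 6400 MBps theoretical peak
# 0.75 is an illustrative assumption, not a measured value
print(effective_mbyteps(peak, 0.75))
```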

For some enthusiasts and purists, this is a good reason to overclock memory to a higher frequency, bringing the effective data throughput closer to, or beyond, a full 800MHz data flow. This is more easily achieved with DDR2 modules rated at 1066MHz.
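A simple hypothetical overhead model (effective throughput = Data Frequency × bus width ÷ 8 × `data_fraction`, where `data_fraction` is the assumed share of bus time carrying data) can be inverted to estimate the Data Frequency needed for a target effective throughput. Assuming, purely for illustration, a 64-bit module and a `data_fraction` of 0.75, reaching the 6400MBps theoretical peak of DDR2-800 comes out at roughly 1067MHz; a different overhead figure would change the result. `required_data_freq_mhz` is a hypothetical helper.

```python
BITS_PER_BYTE = 8


def required_data_freq_mhz(target_mbyteps: float, data_fraction: float,
                           bus_width_bits: int = 64) -> float:
    """Data Frequency (MHz) needed to reach `target_mbyteps` of effective throughput.

    Inverts: effective = freq * bus_width_bits / 8 * data_fraction,
    where data_fraction is a hypothetical share of bus time carrying data.
    """
    return target_mbyteps * BITS_PER_BYTE / (bus_width_bits * data_fraction)


# Target: the 6400 MBps theoretical peak of DDR2-800 on a 64-bit module
print(round(required_data_freq_mhz(6400, 0.75), 1))
```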

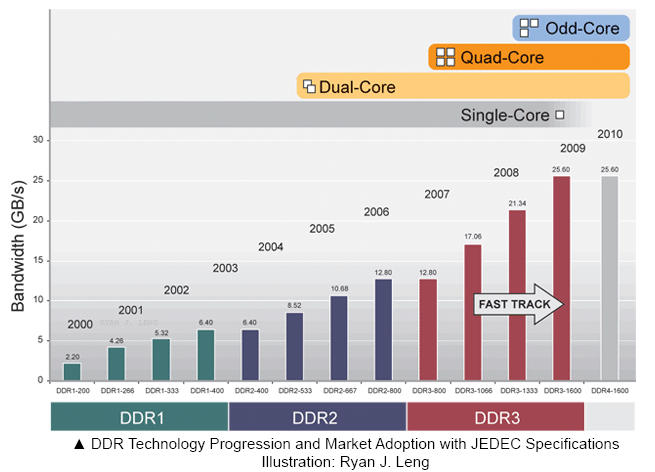

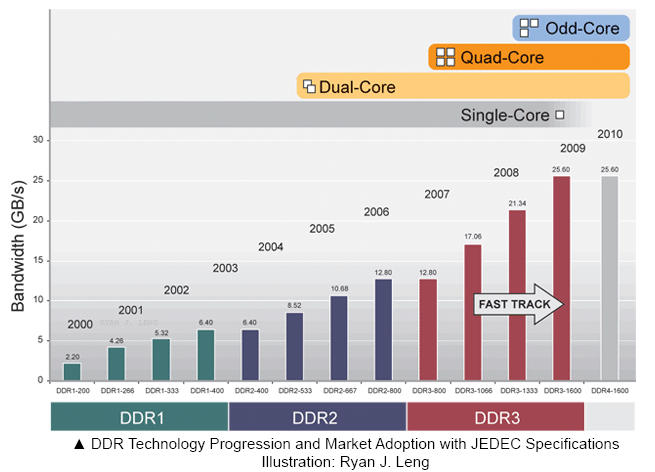

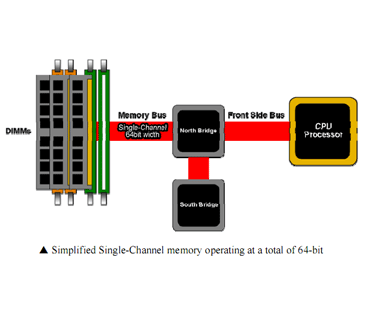

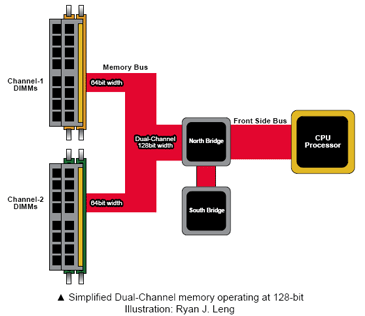

Dual-Channel and Single-Channel modes

Another significant innovation of DDR was the ability to use a Dual-Channel configuration instead of the traditional Single-Channel memory bus. Running two 64-bit channels side by side doubles the memory data path to 128 bits, which improves memory performance considerably. Very few current motherboard chipsets lack this feature.

On Symmetrical Dual-Channel chipsets, placing two memory modules in slots of the same colour automatically gives the user Dual-Channel performance. However, installing three memory modules on a 4-slot motherboard switches it back to Single-Channel mode. Asymmetrical Dual-Channel chipsets can operate in Dual-Channel mode with three DIMMs, effectively always giving the user 128-bit memory performance.
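The population rules above can be summarised as a small decision function. This is a simplified model built only from the rules in this section for a 4-slot, two-channel board: it assumes that two modules in same-coloured slots end up one per channel, and it ignores module size, speed and timing matching, which real chipsets also check. `channel_mode` is a hypothetical name.

```python
def channel_mode(dimms_a: int, dimms_b: int, symmetrical: bool) -> tuple:
    """Return (mode, data path width in bits) for a 2-channel, 4-slot board.

    dimms_a / dimms_b: modules installed on channel A / channel B (0 to 2 each).
    Simplified model of the rules in the text, not a chipset specification.
    """
    if not (0 <= dimms_a <= 2 and 0 <= dimms_b <= 2):
        raise ValueError("desktop DDR supports at most 2 DIMMs per channel")
    if dimms_a + dimms_b == 0:
        raise ValueError("no memory installed")
    if dimms_a == 0 or dimms_b == 0:
        return ("Single-Channel", 64)   # only one 64-bit channel populated
    if symmetrical and dimms_a != dimms_b:
        return ("Single-Channel", 64)   # unbalanced, e.g. 3 DIMMs on 4 slots
    return ("Dual-Channel", 128)        # two 64-bit channels side by side


print(channel_mode(1, 1, symmetrical=True))   # two matched modules
print(channel_mode(2, 1, symmetrical=True))   # three modules, symmetrical chipset
print(channel_mode(2, 1, symmetrical=False))  # three modules, asymmetrical chipset
```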

Current desktop DDR memory technology cannot support more than two DIMMs per channel, whereas the FB-DIMM (Fully Buffered DIMM) memory controllers used in Intel-based servers and workstations are designed for up to eight DIMMs per channel. These high-end computers are usually equipped with a quad-channel configuration, which requires a minimum of four DIMMs (one per channel) to use fully.
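The channel and DIMM counts above combine by simple multiplication. The sketch below assumes one DIMM per channel is enough to activate that channel and that the motherboard exposes every slot the controller allows; a real board may provide fewer slots. `dimm_limits` is a hypothetical helper.

```python
def dimm_limits(channels: int, max_dimms_per_channel: int) -> tuple:
    """Return (minimum DIMMs to use every channel, maximum DIMMs supported).

    Assumes one DIMM per channel activates it and that the board exposes
    every slot the controller allows.
    """
    return channels, channels * max_dimms_per_channel


print(dimm_limits(2, 2))  # desktop dual-channel DDR
print(dimm_limits(4, 8))  # quad-channel FB-DIMM controller
```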

Each standard desktop memory module provides a 64-bit data path, while server memory modules use 72 bits per channel: the additional 8 bits are used for Error Correcting Codes (ECC). Registered DIMMs and FB-DIMMs are much more expensive than standard desktop Unbuffered DIMMs because of the added complexity required for their extra performance and error-correction features.
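The text does not say which error-correcting code the extra 8 bits carry. A common choice for ECC memory is a SECDED (single-error-correcting, double-error-detecting) Hamming code, and under that assumption the sketch below shows why 64 data bits need exactly 8 check bits: a Hamming code needs the smallest r with 2^r ≥ data bits + r + 1, plus one overall parity bit for double-error detection. `secded_check_bits` is a hypothetical name.

```python
def secded_check_bits(data_bits: int) -> int:
    """Check bits needed for a SECDED Hamming code protecting `data_bits`.

    Hamming condition: smallest r with 2**r >= data_bits + r + 1 (single-error
    correction), plus one overall parity bit (double-error detection).
    """
    r = 0
    while 2 ** r < data_bits + r + 1:
        r += 1
    return r + 1


for width in (32, 64):
    check = secded_check_bits(width)
    print(f"{width} data bits + {check} check bits = {width + check}-bit word")
```

For the 64-bit desktop data path this gives the 72-bit width used by ECC modules.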

CPU and Bandwidth Growth

The memory system is directly tied to the evolution of the CPU. As more powerful processors reach the market, more memory bandwidth is required to keep up with CPU processing speed. A slow memory system cannot feed enough data to a fast CPU, leaving the processor idle while it waits for more data to arrive. The objective of the memory system is to store and retrieve large amounts of data for different jobs in the shortest possible time. Without an equally fast memory system, the CPU will be underutilised and inefficient.

The following chart illustrates this phenomenon. Moore’s Law is often paraphrased as CPU processing ability doubling every 18 months; strictly, Gordon Moore’s observation concerns the number of transistors on a chip, which doubles roughly every two years. David “Dadi” Perlmutter, head of the Core team at Intel, classifies it as “a simple description of an economic and technological pace". The efficiency of a computer therefore depends heavily on the memory system keeping up with improvements on the CPU side.
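To see how quickly a fixed doubling period compounds, the sketch below computes the growth factor over a given number of years. It uses the 18-month period quoted above as its default; the six-year horizon and the 24-month alternative are arbitrary illustrative inputs, and `growth_factor` is a hypothetical name.

```python
def growth_factor(years: float, doubling_months: float = 18.0) -> float:
    """Cumulative growth if capability doubles every `doubling_months` months."""
    return 2 ** (years * 12 / doubling_months)


print(growth_factor(6))                      # 18-month doubling period
print(growth_factor(6, doubling_months=24))  # 24-month doubling period
```

Over six years, an 18-month doubling compounds to 16 times the starting capability, which gives a sense of how much the memory system must improve to avoid starving the CPU.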

Single-core CPUs used to increase performance simply by raising the clock frequency within a generation, although between generations there were also architectural advances, made possible by increasing transistor counts. In 2004-2005, a new desktop CPU design changed the dynamics of processor improvement from a single factor, raw speed, into two factors: processing speed and core duplication.

Subsequent CPU performance increases therefore rely on improvements in core frequency, the number of cores within a processor package, caching technology with more advanced prediction and pre-fetch optimisation, and improved bus efficiency to avoid bottlenecks. The data prediction and pre-fetch algorithms used for memory access have a major impact on processor efficiency. Other factors include the way the L1, L2 (and L3) caches are used, together with their associated algorithms and access allocation.
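One standard way to reason about how the L1, L2 (and L3) caches affect efficiency, not taken from this article, is the average memory access time (AMAT) model: each level costs its hit time plus its local miss rate times the cost of going one level further out. The latencies and miss rates below are hypothetical round numbers chosen only to illustrate the calculation, and `amat_ns` is a hypothetical name.

```python
def amat_ns(levels: list, memory_latency_ns: float) -> float:
    """Average memory access time for a cache hierarchy.

    levels: (hit_time_ns, local_miss_rate) pairs ordered from L1 outward.
    Works inward from main memory: cost = hit_time + miss_rate * cost_of_next_level.
    """
    cost = memory_latency_ns
    for hit_time_ns, miss_rate in reversed(levels):
        cost = hit_time_ns + miss_rate * cost
    return cost


# Hypothetical figures: L1 1 ns / 5% misses, L2 4 ns / 20% misses, DRAM 60 ns
print(round(amat_ns([(1.0, 0.05), (4.0, 0.20)], 60.0), 2))
# Same hierarchy if better pre-fetching halved the L2 miss rate (also hypothetical)
print(round(amat_ns([(1.0, 0.05), (4.0, 0.10)], 60.0), 2))
```

In this model, better prediction and pre-fetching show up as lower miss rates, which shrink the weight of the slow DRAM term.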
