Researchers demo real-time speech-recognition brain-computer interface

July 31, 2019 | 11:23

Companies: #facebook #university-of-california-at-san-francisco

Facebook-funded researchers have demonstrated the ability to transcribe brain waves into speech in real time - though the process is ever-so-slightly invasive.

Being able to control a computer system by thought alone is a holy grail of human-computer interaction, not only for the simplicity of such an interface but also for how it could improve the lives of people suffering from a range of conditions which preclude the use of keyboards, mice, and touchscreens. Earlier this year researchers at the University of California at San Francisco (UCSF) unveiled a method of generating synthetic speech from brain recordings, suggesting that it's possible to monitor the part of the brain responsible for speech and decode it with a computer - the only real drawback being that the process took weeks or months to finish transcribing.

Now, a team of scientists has built on that work and accelerated it to real-time transcription - meaning it is possible, in theory at least, for the system to decode speech from brain waves as the subject is attempting to talk.

'For years, my lab was mainly interested in fundamental questions about how brain circuits interpret and produce speech,' explains speech neuroscientist Professor Eddie Chang. 'With the advances we’ve seen in the field over the past decade it became clear that we might be able to leverage these discoveries to help patients with speech loss, which is one of the most devastating consequences of neurological damage. Currently, patients with speech loss due to paralysis are limited to spelling words out very slowly using residual eye movements or muscle twitches to control a computer interface, but in many cases, information needed to produce fluent speech is still there in their brains. We just need the technology to allow them to express it.'

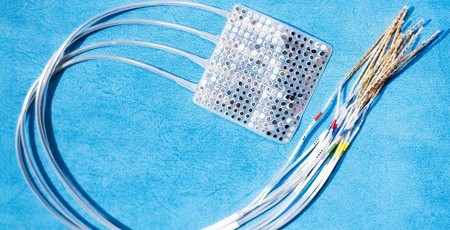

The team's work, which at present has been limited to analysing the brain waves of subjects with normal speech capabilities, isn't exactly non-invasive: subjects are required to have electrodes for electrocorticography (ECoG) placed on the surface of their brain, providing more detailed readings than are available from an electroencephalograph (EEG) or functional magnetic resonance imaging (fMRI) scan - something project lead Dr. David Moses and his team achieved by finding subjects who had already had ECoG sensors implanted in preparation for impending neurosurgery.

The acceleration, Moses explains, comes from pairing a novel set of machine learning algorithms with context data to help narrow the range of likely solutions to the transcription problem. It's fast, but it has drawbacks: 'Real-time processing of brain activity has been used to decode simple speech sounds, but this is the first time this approach has been used to identify spoken words and phrases,' Dr. Moses says. 'It’s important to keep in mind that we achieved this using a very limited vocabulary, but in future studies we hope to increase the flexibility as well as the accuracy of what we can translate from brain activity.'
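
For readers curious how context can narrow the range of likely solutions, the general idea can be sketched as a Bayesian update: a decoder's per-word scores are multiplied by a prior reflecting what the context makes plausible, then renormalised. This is a minimal illustration of that general technique only, not the team's actual method; the function name `apply_context`, the candidate words, and every number below are made up for the example.

```python
# Illustrative sketch only: the words and probabilities are invented,
# and this is not the algorithm used in the study.

def apply_context(likelihoods, context_prior):
    """Weight decoder likelihoods by a context prior and renormalise (Bayes' rule)."""
    unnormalised = {
        word: likelihoods[word] * context_prior.get(word, 0.0)
        for word in likelihoods
    }
    total = sum(unnormalised.values())
    if total == 0:
        raise ValueError("context rules out every candidate word")
    return {word: score / total for word, score in unnormalised.items()}


# Hypothetical decoder output: how well each candidate matches the brain signal.
likelihoods = {"bright": 0.30, "dark": 0.20, "violin": 0.35, "drums": 0.15}

# Hypothetical context: the preceding question was about room lighting,
# so lighting-related answers are considered far more plausible.
context_prior = {"bright": 0.45, "dark": 0.45, "violin": 0.05, "drums": 0.05}

posterior = apply_context(likelihoods, context_prior)

best_without_context = max(likelihoods, key=likelihoods.get)
best_with_context = max(posterior, key=posterior.get)
print(best_without_context, best_with_context)
```

In this toy case the signal alone slightly favours the wrong word, while the context prior shifts the decision to a lighting-related answer; a small vocabulary keeps the set of candidates, and therefore the work per decision, small.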

The team's study forms part of a multi-institution research programme sponsored by Facebook Reality Labs (FRL), a research arm of the social media giant focused on the development of augmented and virtual reality (AR and VR) technologies. The ultimate goal from FRL's end is the development of a non-invasive brain-computer interface (BCI) which would allow the wearer to enter text into a computer system simply by imagining saying things out loud.

More information is available on the UCSF website, while the team's paper has been published under open access terms in the journal Nature Communications.
