Intel has revealed the system requirements for running its delayed Optane non-volatile memory devices, and if your system isn't Kaby Lake or newer you're going to be disappointed.

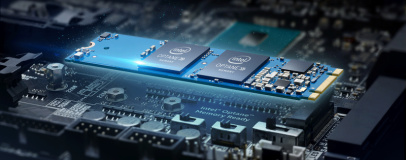

Intel first unveiled Optane in 2015, promising that the technology would launch the following year - a date the company has since missed. Developed in partnership with memory specialist Micron, Optane is an implementation of 3D XPoint technology and an attempt to bridge the gap between fast-but-small volatile DRAM and slow-but-capacious non-volatile mass storage. At the time, Intel claimed Optane would offer performance similar to dynamic RAM (DRAM) while keeping its data when the system is powered off, like a traditional solid-state drive (SSD).

While competing companies have launched similar non-volatile memory products, Intel was the first to promise a product range that would benefit desktop users as well as the server market. During the Intel Developer Forum in 2015, Intel head Brian Krzanich showed a slide indicating that an Optane-equipped desktop PC running a sixth-generation Skylake processor could expect to see performance gains in gaming applications - but now that the company is closer to launching the devices, Skylake support appears to have been dropped.

According to a freshly launched microsite detailing the company's plans for the 3D XPoint technology, Optane will require a seventh-generation Kaby Lake processor at minimum. Additionally, anyone looking to add Optane to their system will need a motherboard based on an Intel 200-series chipset, with an M.2 type 2280-S1-B-M or 2242-S1-B-M storage connector linked to a PCH Remapped PCI-E controller with two or four lanes and B-M keys, meeting Non-Volatile Memory Express (NVMe) v1.1 standards. The motherboard will also need a BIOS supporting Intel's Rapid Storage Technology (RST) driver version 15.5 or above.
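For readers who want to sanity-check a build against that list, the requirements can be expressed as a simple checklist. The following is only an illustrative sketch: the `SystemSpec` class, its field names, and the `unmet_requirements` function are hypothetical, the values must be entered by hand, and it does not detect hardware or use any Intel tool. It also assumes that "200-series" can be treated as a numeric minimum, which the microsite does not state.

```python
from dataclasses import dataclass

# Thresholds taken from the requirements described in the article.
MIN_CPU_GENERATION = 7                      # Kaby Lake is Intel's seventh generation
MIN_CHIPSET_SERIES = 200                    # assumption: treated as a numeric minimum
SUPPORTED_M2_TYPES = {"2280-S1-B-M", "2242-S1-B-M"}
SUPPORTED_PCIE_LANES = {2, 4}
MIN_RST_VERSION = (15, 5)


@dataclass
class SystemSpec:
    """Hypothetical, hand-entered description of a desktop system."""
    cpu_generation: int
    chipset_series: int
    m2_type: str
    pcie_lanes: int
    rst_version: tuple  # (major, minor), e.g. (15, 5)


def unmet_requirements(spec: SystemSpec) -> list:
    """Return a list of human-readable problems; empty if all checks pass."""
    problems = []
    if spec.cpu_generation < MIN_CPU_GENERATION:
        problems.append("CPU is older than seventh-generation Kaby Lake")
    if spec.chipset_series < MIN_CHIPSET_SERIES:
        problems.append("chipset is older than the 200 series")
    if spec.m2_type not in SUPPORTED_M2_TYPES:
        problems.append("M.2 connector is not type 2280-S1-B-M or 2242-S1-B-M")
    if spec.pcie_lanes not in SUPPORTED_PCIE_LANES:
        problems.append("PCI-E link is not two or four lanes")
    if tuple(spec.rst_version) < MIN_RST_VERSION:
        problems.append("RST driver support is older than version 15.5")
    return problems


if __name__ == "__main__":
    # Example: a Skylake system on a 100-series board fails two checks.
    skylake = SystemSpec(6, 100, "2280-S1-B-M", 4, (15, 5))
    for problem in unmet_requirements(skylake):
        print(problem)
```

A system description that returns an empty list meets every requirement modelled here; the NVMe v1.1 and PCH remapping conditions are not modelled and would still need checking against the motherboard's documentation.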

While Intel is clearly getting ready to launch its Optane modules for the desktop, the company is keeping quiet on exactly when the devices will hit retail and, critically, how much they will cost.
