Nvidia has officially launched the full-fat version of its Volta graphics processing architecture, following its appearance in an automotive-focused system-on-chip (SoC) design. If you're eager to get your hands on it for gaming, though, you're going to have to wait: its first outing is, unsurprisingly, in a high-end accelerator board for the data centre.

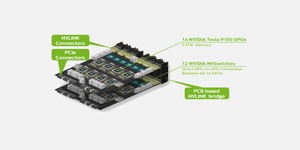

The latest processor to come out of Nvidia, the Volta-based V100 isn't your average general-purpose graphics processing unit (GPGPU). As well as 5,120 CUDA processing cores, the chip includes 640 Tensor Cores designed to accelerate deep-learning and other artificial intelligence workloads - the equivalent, the company claims, of spreading such workloads across 100 CPU cores per GPU. Combined with a next-generation NVLink connection boasting twice the throughput of its predecessor and High Bandwidth Memory 2 (HBM2) with a 50 percent throughput boost, Nvidia claims each board can offer 120 trillion floating-point operations per second (120 teraFLOPS) of compute performance when the workload can be accelerated through the Tensor Cores.

'Artificial intelligence is driving the greatest technology advances in human history,' claimed Jensen Huang, Nvidia founder and chief executive, during a keynote speech unveiling the architecture at the company's annual GPU Technology Conference (GTC). 'It will automate intelligence and spur a wave of social progress unmatched since the industrial revolution. Deep learning, a groundbreaking AI approach that creates computer software that learns, has insatiable demand for processing power. Thousands of Nvidia engineers spent over three years crafting Volta to help meet this need, enabling the industry to realise AI's life-changing potential.'

While the Tesla V100 card itself is likely of interest only to those building deep-learning systems, the keynote also revealed some key features of the Volta architecture beyond just the core count and a total of 21 billion transistors. According to Nvidia's internal testing, Volta offers a five-fold improvement in raw compute performance over current-generation Pascal without the Tensor Cores' help, and a 15-fold improvement over the two-year-old Maxwell architecture - four times greater, the company crows, than Moore's Law would predict.

More information on the Tesla V100 and Volta architecture can be found on the company's official website.
